Cluster information:

Kubernetes version: 1.17.3

Cloud being used: bare-metal

Installation method: bare metal

Host OS: Ubuntu 18.04

Hello everyone, I have been stuck on a problem with FTPS (FileZilla) and Kubernetes for weeks.

CONTEXT :

I have a school project with Kubernetes and FTPS.

I need to create an FTPS server in Kubernetes on port 21, and it needs to run on Alpine Linux.

So I created an image of my FTPS Alpine server with Docker.

I tested that it works properly on its own, using `docker run --name test-alpine -itp 21:21 test_alpine`.

I get this output in FileZilla:

| Status: | Connecting to 192.168.99.100:21… |
|---|---|
| Status: | Connection established, waiting for welcome message… |
| Status: | Initializing TLS… |
| Status: | Verifying certificate… |
| Status: | TLS connection established. |
| Status: | Logged in |
| Status: | Retrieving directory listing… |
| Status: | Calculating timezone offset of server… |
| Status: | Timezone offset of server is 0 seconds. |
| Status: | Directory listing of “/” successful |

It works successfully: FileZilla sees the file that is in my FTPS directory.

So far so good (it works in active mode).

PROBLEM :

What I want is to use my image in my Kubernetes cluster (I use Minikube).

When I run my Docker image behind an Ingress, Service, and Deployment in Kubernetes, I get this:

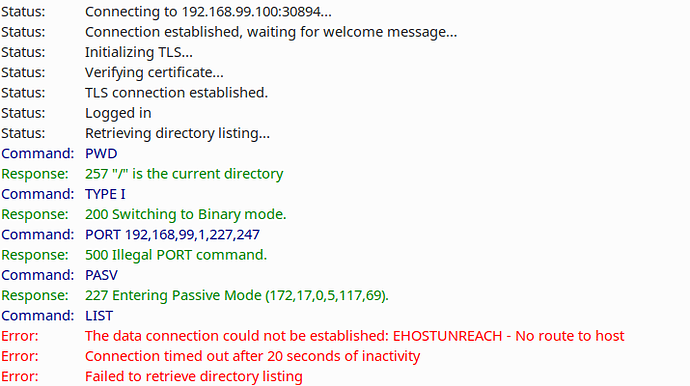

| Status: | Connecting to 192.168.99.100:30894… |
|---|---|
| Status: | Connection established, waiting for welcome message… |
| Status: | Initializing TLS… |
| Status: | Verifying certificate… |
| Status: | TLS connection established. |
| Status: | Logged in |
| Status: | Retrieving directory listing… |
| Command: | PWD |
| Response: | 257 “/” is the current directory |
| Command: | TYPE I |
| Response: | 200 Switching to Binary mode. |
| Command: | PORT 192,168,99,1,227,247 |
| Response: | 500 Illegal PORT command. |
| Command: | PASV |
| Response: | 227 Entering Passive Mode (172,17,0,5,117,69). |
| Command: | LIST |
| Error: | The data connection could not be established: EHOSTUNREACH - No route to host |
| Error: | Connection timed out after 20 seconds of inactivity |
| Error: | Failed to retrieve directory listing |
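
To make the PORT and PASV lines in this log easier to read, here is a minimal sketch that decodes the six comma-separated numbers, assuming the usual FTP convention of four address bytes followed by the port split as `p1 * 256 + p2`. The helper name `decode_ftp_endpoint` is made up for illustration.

```python
from typing import Tuple


def decode_ftp_endpoint(fields: str) -> Tuple[str, int]:
    """Decode 'h1,h2,h3,h4,p1,p2' from a PORT command or PASV reply."""
    parts = [int(p) for p in fields.split(",")]
    if len(parts) != 6 or any(not 0 <= p <= 255 for p in parts):
        raise ValueError("expected six comma-separated values in 0..255")
    ip = ".".join(str(p) for p in parts[:4])
    # The port is sent as two bytes: high byte first, then low byte.
    port = parts[4] * 256 + parts[5]
    return ip, port


# Values copied from the FileZilla log above.
print(decode_ftp_endpoint("192,168,99,1,227,247"))  # ('192.168.99.1', 58359)
print(decode_ftp_endpoint("172,17,0,5,117,69"))     # ('172.17.0.5', 30021)
```

Decoded, the PASV reply asks the client to open the data connection to 172.17.0.5:30021, not to the 192.168.99.100 address FileZilla connected to. My guess (an assumption, not something I have confirmed) is that 172.17.0.5 is an address internal to the cluster, which would explain the "No route to host" error.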

SETUP :

ingress.yaml :

    kind: Ingress
    metadata:
      annotations:
        nginx.ingress.kubernetes.io/rewrite-target: /$1
      namespace: default
      name: ingress-controller
    spec:
      backend:
        serviceName: my-nginx
        servicePort: 80
      backend:
        serviceName: ftps-alpine
        servicePort: 21

ftps-alpine.yml :

    apiVersion: v1
    kind: Service
    metadata:
      name: ftps-alpine
      labels:
        run: ftps-alpine
    spec:
      type: NodePort
      ports:
      - port: 21
        targetPort: 21
        protocol: TCP
        name: ftp21
      - port: 20
        targetPort: 20
        protocol: TCP
        name: ftp20
      selector:
        run: ftps-alpine
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: ftps-alpine
    spec:
      selector:
        matchLabels:
          run: ftps-alpine
      replicas: 1
      template:
        metadata:
          labels:
            run: ftps-alpine
        spec:
          containers:
          - name: ftps-alpine
            image: test_alpine
            imagePullPolicy: Never
            ports:
            - containerPort: 21
            - containerPort: 20

WHAT I TRIED :

- When I saw the error message `Error: The data connection could not be established: EHOSTUNREACH - No route to host`, I googled it and found this question: https://stackoverflow.com/questions/31001017/ftp-in-passive-mode-ehostunreach-no-route-to-host . But I already run my FTPS server in active mode.
- I changed my vsftpd.conf file and my service:

vsftpd.conf :

    seccomp_sandbox=NO
    pasv_promiscuous=NO
    listen=NO
    listen_ipv6=YES
    anonymous_enable=NO
    local_enable=YES
    write_enable=YES
    local_umask=022
    dirmessage_enable=YES
    use_localtime=YES
    xferlog_enable=YES
    connect_from_port_20=YES
    chroot_local_user=YES
    #secure_chroot_dir=/vsftpd/empty
    pam_service_name=vsftpd
    pasv_enable=YES
    pasv_min_port=30020
    pasv_max_port=30021
    user_sub_token=$USER
    local_root=/home/$USER/ftp
    userlist_enable=YES
    userlist_file=/etc/vsftpd.userlist
    userlist_deny=NO
    rsa_cert_file=/etc/ssl/private/vsftpd.pem
    rsa_private_key_file=/etc/ssl/private/vsftpd.pem
    ssl_enable=YES
    allow_anon_ssl=NO
    force_local_data_ssl=YES
    force_local_logins_ssl=YES
    ssl_tlsv1=YES
    ssl_sslv2=NO
    ssl_sslv3=NO
    allow_writeable_chroot=YES
    #listen_port=21

I changed the NodePorts of my Kubernetes service to 30020 and 30021 and added them to the container ports.

I changed `pasv_min_port` and `pasv_max_port` to match.

I added `pasv_address` set to my Minikube IP.

Nothing works.

Question :

How can I get the same successful output as in the first log, but on my Kubernetes cluster?

If anything needs clarifying, just ask.